BroPikapi

Member

Good night everyone.

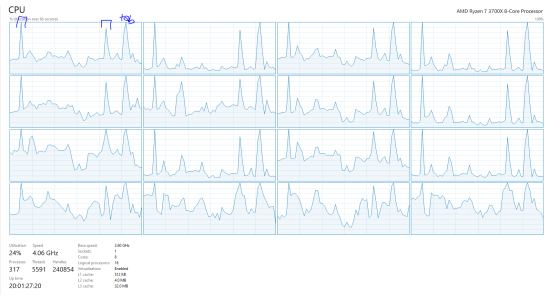

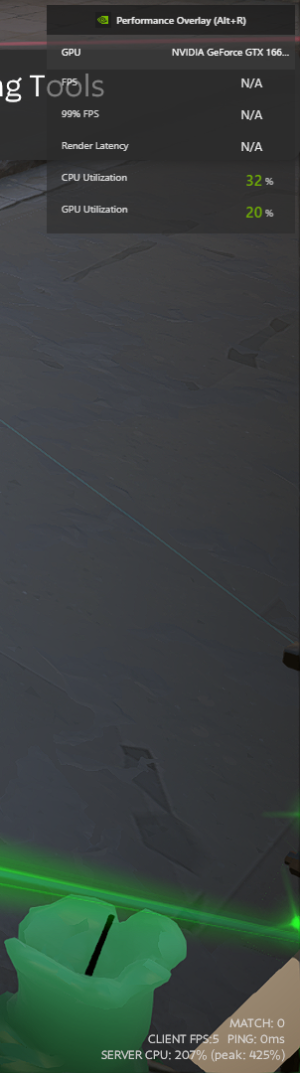

I found a bug where the FPS counter gets divided by the host_timescale and the CPU usage counter gets multiplied by it.

Example:

Suppose we set host_timescale 5 on a local server (for whatever purpose). The game actually runs at 60 FPS, but the counter apparently uses the host timescale when calculating the client's FPS, so the bottom-right corner shows 12 FPS (60 / 5).

The server CPU reading is likewise multiplied by the host_timescale value. With the same host_timescale 5, if an external benchmarking tool (I used ShadowPlay) reports 50% CPU usage, the in-game counter shows around 50 * 5 = 250% CPU usage.
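To make the arithmetic explicit, here is a minimal sketch of the scaling I am observing. It only models the symptom; it is not the game's actual code, and the function names `displayed_fps` and `displayed_cpu_percent` are made up for illustration.

```python
def displayed_fps(real_fps: float, host_timescale: float) -> float:
    """FPS shown by the in-game counter, assuming it is divided by host_timescale."""
    return real_fps / host_timescale


def displayed_cpu_percent(real_cpu_percent: float, host_timescale: float) -> float:
    """CPU usage shown in-game, assuming it is multiplied by host_timescale."""
    return real_cpu_percent * host_timescale


# Values from the example above: host_timescale 5, 60 real FPS, 50% real CPU usage.
print(displayed_fps(60, 5))          # 12.0
print(displayed_cpu_percent(50, 5))  # 250
```

If you want to check your own numbers, plug in the FPS and CPU usage reported by an external tool and compare the results with what the in-game counters show.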

Proof:

(Uso de CPU=CPU usage)

I will be reading the replies and adding any valuable information about this bug. I invite the community to reproduce it and post their results.